MicroVQA: A Multimodal Reasoning Benchmark for Microscopy-Based Scientific Research

James Burgess*, Jeffrey J Nirschl*, Laura Bravo-Sánchez*, Alejandro Lozano, Sanket Rajan Gupte, Jesus G. Galaz-Montoya, Yuhui Zhang, Yuchang Su, Disha Bhowmik, Zachary Coman, Sarina M. Hasan, Alexandra Johannesson, William D. Leineweber, Malvika G Nair, Ridhi Yarlagadda, Connor Zuraski, Wah Chiu, Sarah Cohen, Jan N. Hansen, Manuel D Leonetti, Chad Liu, Emma Lundberg, Serena Yeung-Levy

*co-first authorship

CVPR 2025

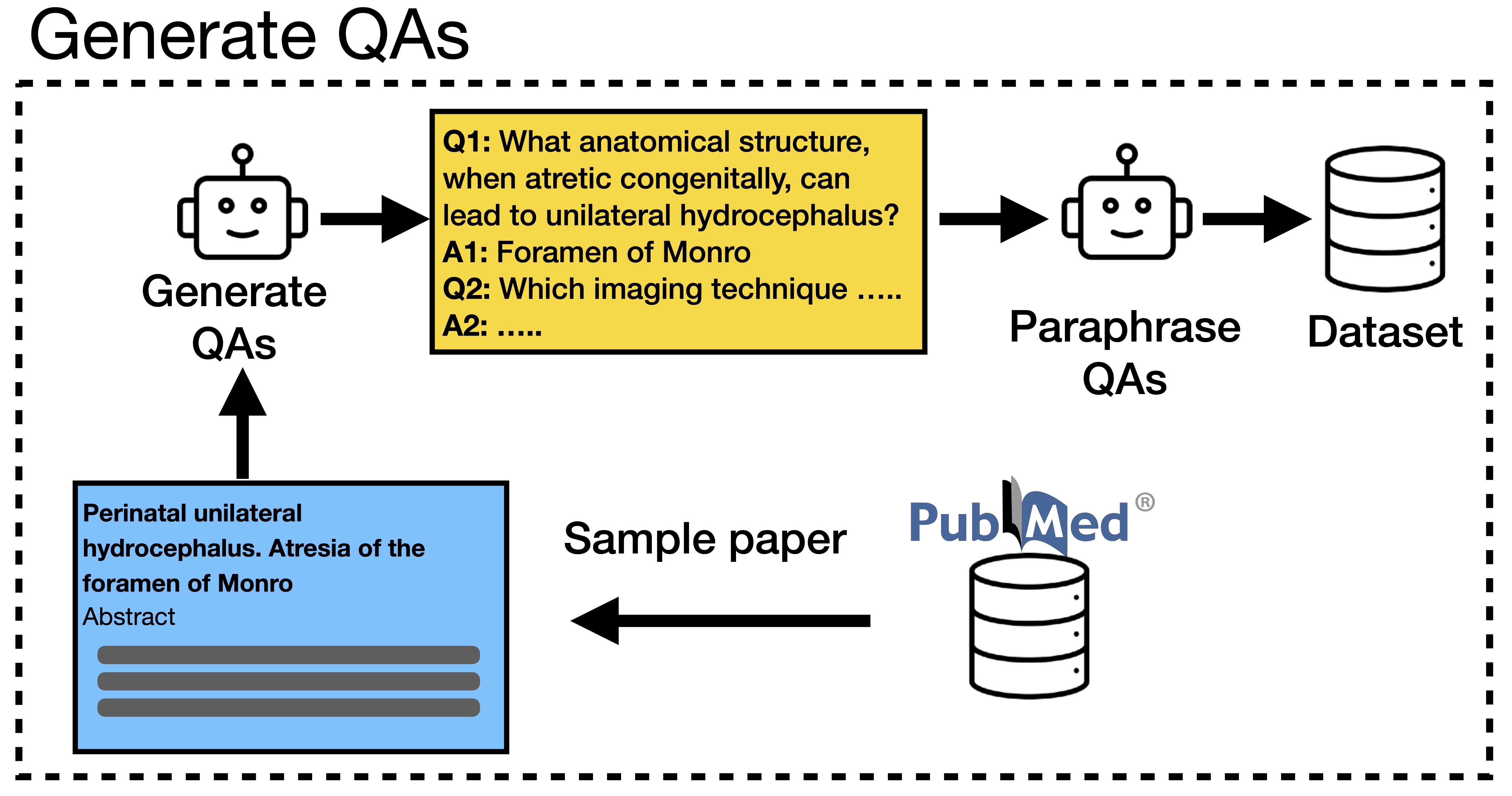

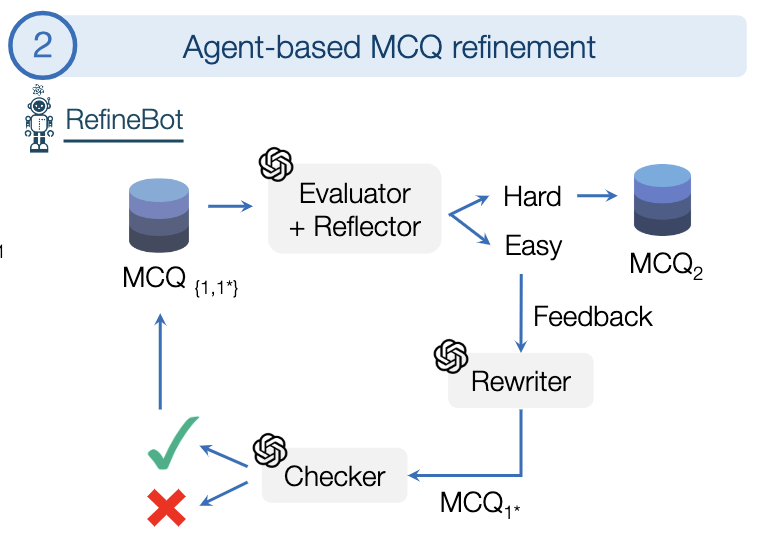

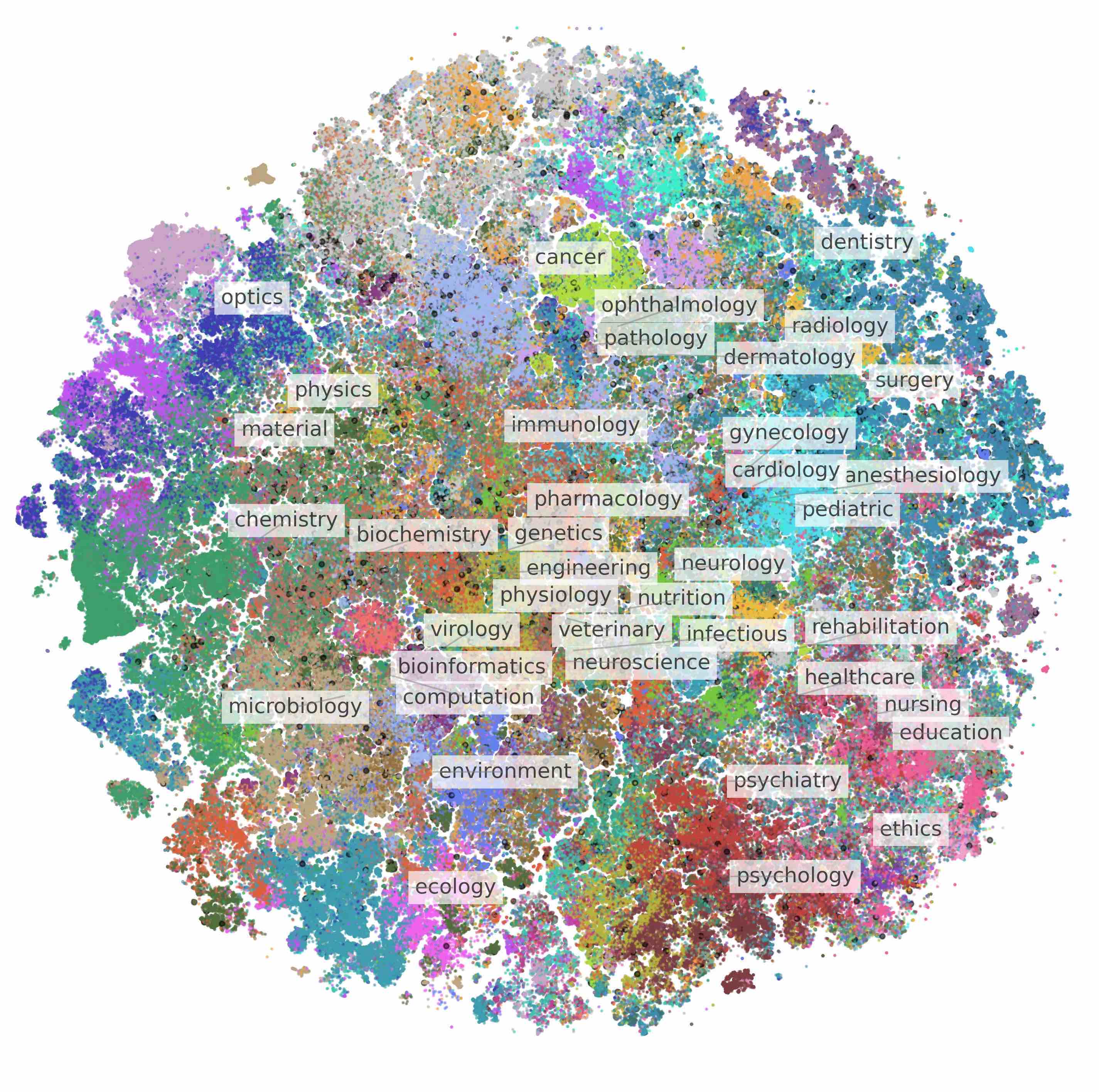

MicroVQA is an expert-curated benchmark for research-level reasoning in biological microscopy. We also propose RefineBot, a method for removing language shortcuts from multiple-choice VQA questions.